
What is the AI Processor Market Size?

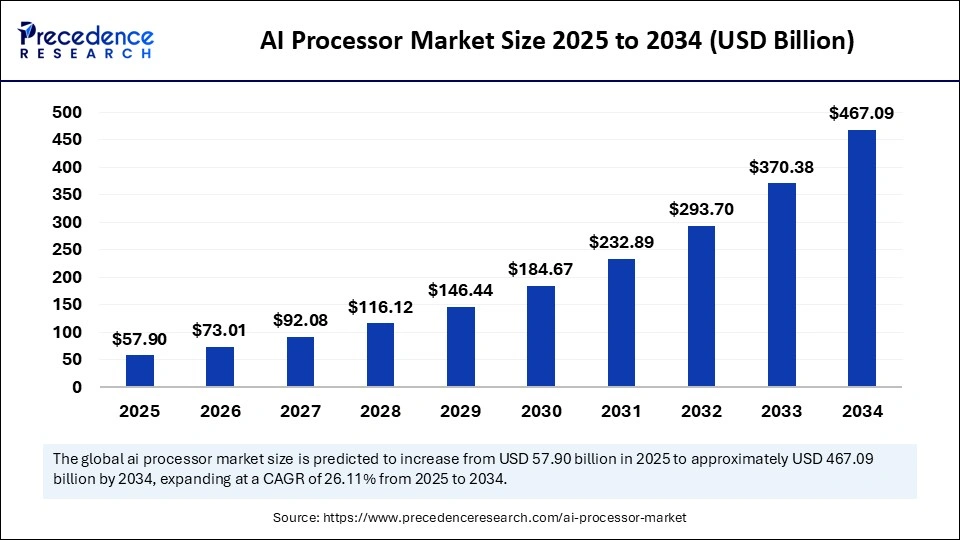

The global AI processor market size is calculated at USD 57.90 billion in 2025 and is predicted to increase from USD 73.01 billion in 2026 to approximately USD 467.09 billion by 2034, expanding at a CAGR of 26.11% from 2025 to 2034. The AI processor market is driven by rising adoption of AI technologies across industries, increased data processing needs, and advancements in chip architectures.
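The growth rate quoted above can be cross-checked against the start and end values with the standard compound annual growth rate formula. The sketch below is only an illustrative check, not the report's own methodology; the helper names `cagr` and `project` are hypothetical, and the nine compounding periods assume the forecast runs from 2025 to 2034.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.26 means 26%)."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Figures quoted in the report (USD billion).
SIZE_2025 = 57.90
SIZE_2034 = 467.09

# Assumption: nine compounding periods between 2025 and 2034.
implied_rate = cagr(SIZE_2025, SIZE_2034, years=2034 - 2025)
size_2026 = project(SIZE_2025, implied_rate, years=1)

print(f"Implied CAGR 2025-2034: {implied_rate:.2%}")
print(f"Projected 2026 size: USD {size_2026:.2f} billion")
```

Under these assumptions the implied rate and the one-year projection land close to the reported 26.11% and USD 73.01 billion, which suggests the headline figures are internally consistent.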

Market Highlights

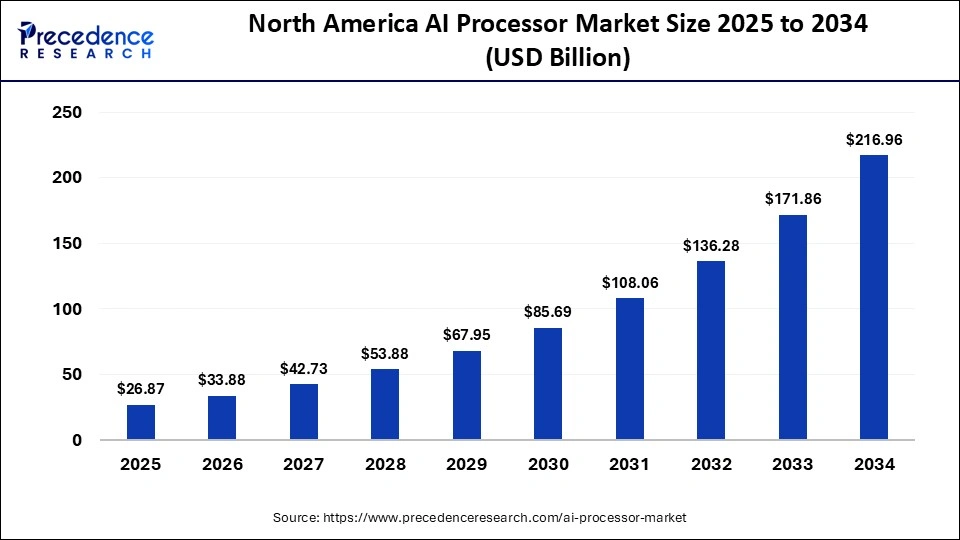

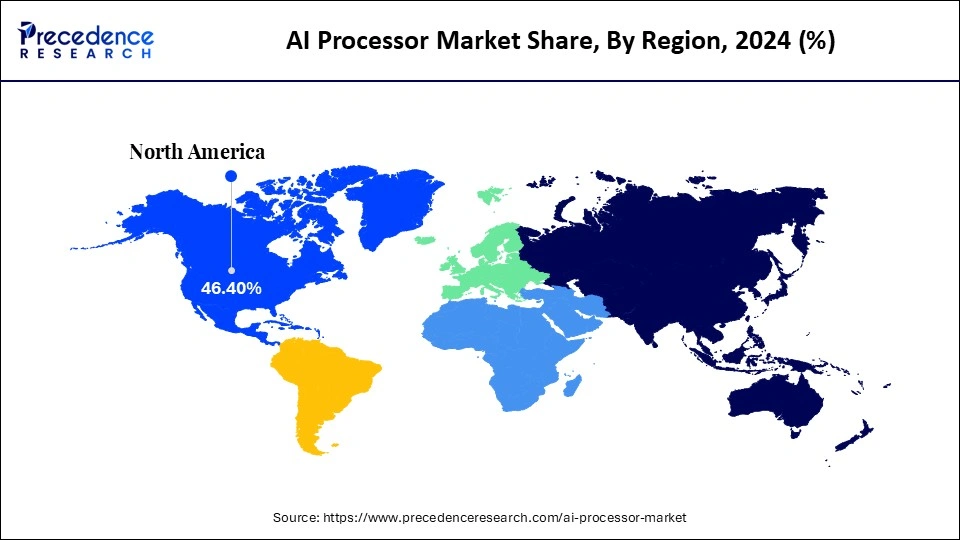

- North America led the AI processor market with around 46.4% of the market share in 2024.

- Asia Pacific is expected to expand at the fastest CAGR between 2025 and 2034.

- By processor type, the GPU (graphics processing unit) segment held approximately 35.4% of the market share in 2024.

- By processor type, the NPU (neural processing unit) segment is growing at a double-digit CAGR of 21.5% between 2025 and 2034.

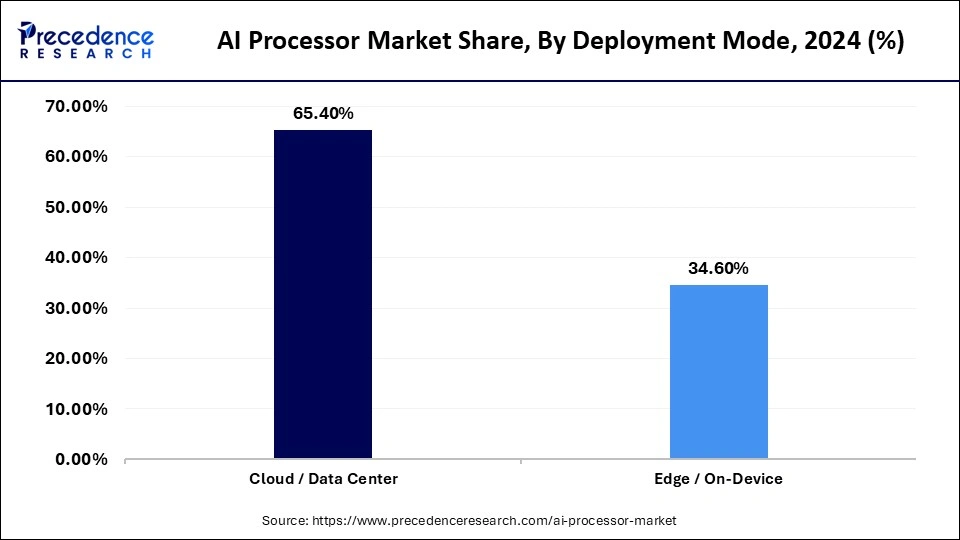

- By deployment mode, the cloud / data center segment captured the highest market share of 65.4% in 2024.

- By deployment mode, the edge / on-device segment is expanding with the highest CAGR from 2025 to 2034.

- By application, the consumer electronics segment held the major market share of 37.4% in 2024.

- By application, the automotive segment is poised to grow at a notable CAGR between 2025 and 2034.

- By end-user industry, the IT & telecom segment captured the biggest market share of 34.4% in 2024.

- By end-user industry, the automotive & industrial segment is expected to expand at a notable CAGR from 2025 to 2034.

Powering the Future: The Surge of AI Processors

Artificial intelligence is finding more and more applications across industries, from self-driving vehicles to smart devices, creating demand for faster processors that consume less power. AI processors are specialized chips designed to accelerate specific AI workloads, such as machine learning and deep neural network algorithms. By moving data through the system faster, they enable real-time decision-making.

Demand is being fueled by increased use of AI in the cloud, the rise of edge computing, and the growing complexity of AI models. As leading companies build systems for AI-enabled process automation and intelligent analytics, these processors support digital transformation objectives across many commercial segments and drive higher computing performance across sectors.

Key Technological Shifts

Transforming Computational Power: New Developments in the AI Processor Market

The AI processor market is experiencing rapid growth driven by the need for increased computational speed, lower latency, and greater efficiency in AI-based applications. New-generation chip architectures, such as neuromorphic and heterogeneous designs, have radically changed processing performance by simulating the human brain and performing multiple tasks at high speeds. Such developments enable edge devices to process complex, AI-heavy workloads directly, rather than relying heavily on cloud-based processing.

In addition, leading technology firms are using advanced fabrication nodes, such as 3 nm and 5 nm, to deliver higher performance at lower power. Specialized AI accelerators, quantum processing integration, and chiplet-based modular architectures are further changing the market terrain. High-bandwidth memory (HBM) and new interconnects, combined with AI processors, expand the possibilities in autonomous vehicles, robotics, and data centers. Overall, these developments set the stage for a new age of intelligent, efficient, and adaptive computing systems.

Market Trends

- Edge AI Comes to the Forefront: AI processing is shifting from centralized clouds to edge devices, where latency is lower and responses are faster. Smartphones, autonomous cars, and Internet of Things (IoT) systems are driving demand for chips that handle real-time, on-device intelligence effectively.

- Custom Chips Redefine Performance: Tech giants are creating domain-specific processors that replace traditional graphics processing units (GPUs). Technologies such as neural processing units (NPUs) and application-specific integrated circuits (ASICs) can optimize speed and energy use, giving businesses a competitive advantage in AI workloads focused on specialized tasks such as vision and speech.

- Efficiency Becomes the New Metric: Manufacturers are refocusing engineering effort on improving performance-per-watt and reducing hardware costs. Energy-efficient processors are dominating product roadmaps as sustainability goals and operational cost reductions rise to the top of AI hardware deployment priorities.

- Governments Pursue Chip Independence: In light of worldwide chip supply issues, countries are investing heavily in local production of AI processors. National initiatives target self-sufficiency, data sovereignty, and the development of domestic semiconductor ecosystems that do not depend on other countries.

- Training and Inference Converge: The distinction between training chips and inference chips is blurring. Many new architectures deliberately balance training and inference capabilities to support scalable deployments of large models across sectors such as health, finance, and automotive.

AI Processor Market Outlook

With the growth of specialized compute for machine-learning workloads, the AI processor market is forecast to grow substantially. One market report estimates a CAGR of 17% for AI processors from 2023 to 2030, with the overall AI processor market significantly larger than that.

Demand is increasing rapidly worldwide, including in Asia-Pacific, due in part to expanding digital infrastructure and edge AI use cases. New government AI missions, cloud build-outs, and data center investments are expected to support this growth outside traditional major markets such as the U.S., Canada, and the European Union.

Furthermore, government investment in AI R&D has increased more than 17-fold, from USD 207 million to USD 3.6 billion. This reflects increased upstream investment in AI hardware, architectures, and algorithms, which are essential to the development of the AI processor market.

AI processors are becoming essential to hardware designs that deliver better performance and energy efficiency for edge and cloud applications. As a result, hardware and technology vendors are focusing on low-power processor designs and optimized AI architectures as a key research field for scaling compute infrastructure sustainably.

The major drivers of AI processor growth are: widespread enterprise AI adoption (e.g., generative AI, edge inference), increasing demand for low-latency and domain-specific processing, and significant investment in compute infrastructure, with associated ecosystem support from both governments and hyperscalers.

The constraints on growth in the space include: increasing complexity of chip design, a less stable technology supply chain, rising costs of fabrication and packaging, and a shortage of the skilled hardware engineers needed to accelerate AI development.

Market Scope

| Report Coverage | Details |
| --- | --- |
| Market Size in 2025 | USD 57.90 Billion |
| Market Size in 2026 | USD 73.01 Billion |
| Market Size by 2034 | USD 467.09 Billion |
| Market Growth Rate from 2025 to 2034 | CAGR of 26.11% |
| Dominating Region | North America |
| Fastest Growing Region | Asia Pacific |
| Base Year | 2025 |
| Forecast Period | 2025 to 2034 |
| Segments Covered | Processor Type, Deployment Mode, Application, End-User Industry, and Region |
| Regions Covered | North America, Europe, Asia-Pacific, Latin America, and Middle East & Africa |

AI Processor Market Segment Insights

Processor Type Insights

Graphics Processing Units (GPUs) hold a dominant 35.4% share of the AI processor market. The enormous parallel processing capability of GPUs makes them perfect for deep learning, computer vision, and the generative AI workloads that we see today. They continue to serve as the processing foundation for AI workloads in data centers and large cloud environments, providing performance and scalable processing power.

Neural Processing Units (NPUs) are the fastest-growing segment and are designed specifically to accelerate machine learning tasks. NPUs are effective because they process many matrix operations while consuming very little power. By supporting on-device intelligence, NPUs are essential for real-time AI inference in mobile, IoT, and embedded applications, and they will continue to be adopted across several industries.

Central Processing Units (CPUs) are an integral part of all AI workloads as a base processing layer. While CPUs are not specialized processors like GPUs or NPUs, they serve a critical function in system-level control, data orchestration, and distribution of workloads across the AI architecture. CPUs remain vital processing solutions in hybrid computing and edge environments.

Deployment Mode Insights

Cloud and data centers lead the AI processor market with a 65.4% share, due to their ability to train high-volume AI models and run complex analytics. These centralized infrastructures provide scalable storage, advanced GPUs, and support for multi-tenant AI workloads, allowing organizations to simplify the process of developing and deploying AI.

Edge and on-device deployments are the fastest-growing deployment mode as organizations seek low-latency inference and in-the-moment decision-making. With smart devices, autonomous systems, and IoT sensors running AI applications locally, on-device edge processors provide fast responses while limiting reliance on cloud services.

Hybrid AI systems, which combine cloud and edge deployment, are emerging as a recognized deployment mode. Hybrid systems allow cloud-based continuous learning while executing tasks in real time at the device level, balancing performance, efficiency, and data security.

Application Insights

The consumer electronics category has the largest market share, at 37.4%, due primarily to the integration of AI into smartphones, wearables, smart TVs, and personal assistants (e.g., Google Assistant and Apple's Siri). These products rely on AI processors for image recognition, speech understanding, smart performance optimization, and product recommendations that enhance the user experience.

The automotive industry is the fastest-growing application category, adopting AI processors for advanced driver-assistance systems (ADAS), autonomous driving, and energy optimization in electric vehicles. As vehicles become smarter, AI chips will play a significant role in handling a growing number of real-time information sources and, ultimately, in sensor fusion and decision-making.

Healthcare applications are gaining traction as AI processors enable faster image analysis, disease detection, and interpretation of medical data. AI chips have been shown to support diagnostic decisions, robotic surgery, and analytics for personalized treatment in rapidly evolving medical ecosystems.

End User Insights

The IT and telecom segment leads the end-user space, accounting for a 34.4% share, driven by rapid data generation and the use of AI in network optimization, cybersecurity, and data analytics. AI processors give carriers enhanced ways to deliver services and can automate infrastructure management.

The automotive and industrial end-user segment is the fastest-growing, driven by automation, predictive maintenance, and autonomous systems. AI chips are being incorporated into manufacturing lines and industrial robotics to improve precision, efficiency, and timely data-based decisions.

Consumer electronics remains a leading and still-developing area of AI processor use, supporting smart connectivity, AI-driven interaction, and live performance tuning in the products companies launch each year, from home automation systems to state-of-the-art entertainment platforms.

AI Processor Market Regional Insights

The North America AI processor market size is estimated at USD 26.87 billion in 2025 and is projected to reach approximately USD 216.96 billion by 2034, with a 26.12% CAGR from 2025 to 2034.

Why Does North America Maintain Its Market Leadership?

Much of North America's positioning is based on hyperscale demand, historical AI research and development intensity, and access to innovative IP owners (chip designs, frameworks, and large AI model labs). Cloud providers continue major investments in AI clusters and accelerators, driving enterprise purchase cycles and changing the market with custom-built ASICs and tensor cores. North America's strength lies in demand density (both data centers and enterprise AI) and venture funding flowing into edge and accelerator startups, creating a virtuous cycle between software models and bespoke silicon.

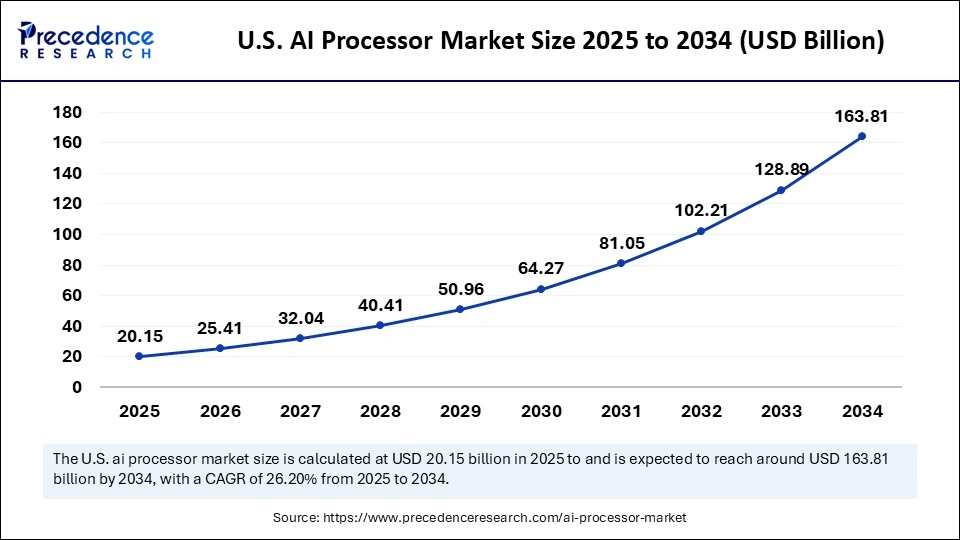

The U.S. AI processor market size is calculated at USD 20.15 billion in 2025 and is expected to reach nearly USD 163.81 billion in 2034, accelerating at a strong CAGR of 26.20% between 2025 and 2034.

U.S. AI Processor Market Trend

The U.S. has deep chip design shops, the largest cloud providers, and federal and industry-sponsored coordination for semiconductor resiliency, which reduces time-to-market for next-gen AI processors. The purchase decisions of hyperscalers (with co-design of their accelerators) determine the architectures that vendors prioritize, while co-located AI software ecosystems improve integration, performance tuning, and lifecycle support. The U.S. is an innovation-to-deployment engine for AI silicon.

In October 2025, Apple unveiled the M5 chip, marking a major leap in AI performance for Apple Silicon. The processor delivers faster on-device machine learning, advanced energy efficiency, and enhanced capabilities across Mac and iPad devices.

The rise of Asia Pacific is based on improvements in on-prem and cloud AI, increasing foundry capacity, and national industrial strategies for semiconductor autonomy. Supply-side improvements (local fabs and yield improvements), coupled with large domestic cloud and consumer AI requirements, are compressing scaling times for sub-regional chip makers and OEMs so adoption curves are steepening rapidly across cloud, telecom, and edge applications. Competitive manufacturing environments and government incentives are accelerating capacity expansion and verticality across AI stack solutions.

China AI Processor Market Trend

China's ecosystem includes a mix of large cloud players, strong end-market demand, and a high velocity of domestic AI chip production and yield improvements. Local firms are building on maturing silicon design, supportive local policy, and capital support for local fabs and model development, decreasing import reliance and facilitating large-scale local deployments for inference and training. Together, demand, the manufacturing ramp, and policy have an outsized effect on the growth potential of China and the wider Asia-Pacific region.

In May 2025, Nvidia introduced a low-cost AI chip designed for China to comply with U.S. export restrictions. CEO Jensen Huang emphasized maintaining market presence amid ongoing U.S.-China trade tensions affecting advanced semiconductor exports.

Europe is becoming an important growth area in the AI processor market, fueled by the digitalization of industry, sustainable semiconductor production, and government-supported initiatives. The European Chips Act and a host of funding programs are helping countries attract semiconductor investments, creating a sense of regional autonomy in chip design and manufacturing. Much of the demand is driven by the integration of AI in automotive, industrial automation, healthcare and telecom markets where data privacy and energy efficiency are prioritized.

Germany AI Processor Market Trends

Germany is the center of AI semiconductor deployment in Europe, driven by its strong automotive and industrial base. Major automotive manufacturers and Tier-1 suppliers are making significant investments in AI-enabled manufacturing processes and autonomous vehicle technology, which is driving demand for high-performance, low-latency processors. Additionally, Germany's many research institutes and chip developers are collaborating on energy-efficient architectures and edge AI for industrial robotics. The interplay of industry expertise, R&D capability, and national semiconductor strategies positions Germany as the model for Europe's AI hardware industry, setting the stage for AI hardware adoption across the region.

The Middle East & Africa (MEA) has a rapidly growing AI processor market, driven by extensive digital transformation initiatives, overarching national AI strategies, and investment in smart infrastructure. Many governments in the Gulf Cooperation Council (GCC) region list the development of AI and semiconductors among the top priorities of their national diversification programs. Initiatives like Saudi Arabia's NEOM and the UAE's Smart Dubai underscore the scale of AI accelerator research and deployment in cloud computing, as well as AI applications in surveillance technologies and edge analytics.

UAE AI Processor Market Trend

The UAE has become a leader in the MEA AI processor market as a result of a robust policy framework, strong digital capabilities, and partnerships with global technology leaders. The UAE National AI Strategy 2031 and the data center investments of companies like Microsoft and Amazon Web Services have been instrumental in scaling AI use cases and technology development. The drive to establish sovereign AI capabilities, coupled with a focus on opportunistic research collaborations, positions the UAE as a regional center for high-performance computing and AI innovation.

AI Processor Market Value Chain

The upstream segment provides the essential inputs and infrastructure for AI processor production. This includes semiconductor-grade silicon wafers, photolithography equipment, EDA (Electronic Design Automation) software, design IP cores, and raw materials such as rare earth metals and chemicals. Key contributors at this stage include wafer manufacturers, foundry equipment providers (ASML, Applied Materials, Lam Research), and IP licensors (ARM, Synopsys, Cadence).

Value creation is tied to technological complexity, capital intensity, and supply security. Advanced lithography (5 nm, 3 nm, and below) and EUV capabilities command significant upstream leverage. Companies that supply cutting-edge design tools and verified IP libraries for neural processing and parallel computing hold substantial bargaining power due to high switching costs and intellectual property barriers.

The midstream segment is the core value-creation layer, where semiconductor design firms and foundries transform intellectual property into functional silicon. Activities include chip architecture design, simulation, physical layout, wafer fabrication, testing, and advanced packaging.

Fabless companies (such as NVIDIA, AMD, and Qualcomm) dominate chip design, focusing on architecture innovation and AI model optimization, while foundries (such as TSMC, Samsung Electronics, and GlobalFoundries) handle high-volume manufacturing. Integrated device manufacturers (IDMs) like Intel and IBM operate across both design and production.

Value is created through differentiation in computational performance, energy efficiency, parallelism, and software-hardware co-optimization. The integration of 3D stacking, chiplets, and heterogeneous computing architectures further enhances performance-per-watt ratios.

Midstream firms capture the majority of the market's profit pool due to their control over proprietary architectures, process nodes, and production capabilities. Barriers to entry are extremely high because of capital expenditure, fabrication precision, and R&D intensity.

The downstream layer focuses on system-level integration, software adaptation, and end-use deployment across industries such as cloud computing, automotive, consumer electronics, healthcare, and industrial automation. OEMs, hyperscale data centers, and edge device manufacturers integrate AI processors into servers, autonomous vehicles, robotics, and mobile systems.

Software frameworks (TensorFlow, PyTorch, CUDA, OpenVINO) and compiler optimization play a vital role in translating hardware potential into application-level performance. Value creation in this stage lies in hardware-software co-design, system compatibility, and application-specific optimization. Companies offering integrated AI hardware platforms coupled with proprietary software stacks capture recurring value through developer ecosystems and long-term support contracts.

AI Processor Market Companies

NVIDIA Corporation

Corporate Information

- Headquarters: Santa Clara, California, United States

- Year Founded: 1993

- Ownership Type: Publicly Traded (NASDAQ: NVDA)

History and Background

NVIDIA Corporation was founded in 1993 by Jensen Huang, Chris Malachowsky, and Curtis Priem with the vision of revolutionizing visual computing. Initially recognized for pioneering graphics processing units (GPUs) for gaming and visualization, NVIDIA evolved into a global leader in artificial intelligence (AI), high-performance computing (HPC), and data center technologies.

In the AI processor market, NVIDIA is the dominant force driving innovation through its GPU architectures optimized for AI acceleration. Its processors, built on the CUDA programming model and enhanced with Tensor Cores, are at the heart of deep learning, generative AI, and autonomous systems. NVIDIA's AI chips, including the H100 and A100 Tensor Core GPUs, set the global standard for AI model training and inference across data centers, cloud environments, and edge computing applications.

Key Milestones / Timeline

- 1993: Founded in Santa Clara, California

- 1999: Launched the world's first GPU, the GeForce 256

- 2016: Introduced Pascal architecture, enabling deep learning acceleration

- 2020: Released the A100 Tensor Core GPU for large-scale AI workloads

- 2023: Launched the H100 Hopper architecture for generative AI and LLM applications

- 2024: Announced next-generation Blackwell AI processor platform for trillion-parameter model training

Business Overview

NVIDIA designs GPUs, AI accelerators, and computing systems that power modern AI infrastructure, relying on foundry partners for fabrication. In the AI processor domain, the company's chips are used for model training, simulation, and inference across industries, including cloud computing, healthcare, automotive, and robotics. NVIDIA also offers complete AI ecosystems, including DGX systems, Grace Hopper Superchips, and NVIDIA AI Enterprise software, enabling end-to-end AI deployment.

Business Segments / Divisions

- Data Center and AI

- Gaming

- Professional Visualization

- Automotive and Edge Computing

Geographic Presence

NVIDIA operates globally with major offices in the United States, Taiwan, Israel, India, and Switzerland, supported by a vast network of AI research centers and partners worldwide.

Key Offerings

- H100 and A100 Tensor Core GPUs for AI model training and inference

- Grace Hopper Superchip for high-bandwidth AI processing

- NVIDIA DGX and DGX Cloud for scalable AI infrastructure

- NVIDIA AI Enterprise software stack for accelerated computing deployment

Financial Overview

NVIDIA reports annual revenues exceeding USD 60 billion, driven primarily by data center and AI processor demand. The company's valuation and growth are closely tied to the rapid global adoption of AI computing.

Key Developments and Strategic Initiatives

- April 2023: Announced H100-powered DGX Cloud platform for enterprise AI

- September 2023: Partnered with major cloud providers (AWS, Google Cloud, Microsoft Azure) to deliver AI supercomputing infrastructure

- March 2024: Introduced Blackwell AI architecture featuring next-generation parallelism and energy efficiency

- January 2025: Expanded NVIDIA AI Enterprise suite for multimodal and generative AI integration

Partnerships & Collaborations

- Collaborations with OpenAI, Anthropic, and Meta for large-scale AI model training

- Strategic alliances with major cloud service providers for GPU-accelerated computing

- Partnerships with semiconductor foundries such as TSMC for advanced AI chip fabrication

Product Launches / Innovations

- H100 Tensor Core GPU (2023)

- Blackwell AI Processor Platform (2024)

- DGX GH200 Supercomputer for generative AI workloads (2025)

Technological Capabilities / R&D Focus

- Core technologies: GPU acceleration, parallel computing, AI optimization, and chip-to-chip interconnects

- Research Infrastructure: Global AI labs in the U.S., Israel, and India

- Innovation focus: Generative AI acceleration, energy-efficient AI computation, and multi-chip modular architectures

Competitive Positioning

- Strengths: Technological leadership in GPU computing, robust AI software ecosystem, and deep partnerships in AI infrastructure

- Differentiators: Integrated hardware-software ecosystem optimized for large-scale AI

SWOT Analysis

- Strengths: Market dominance in AI processors, strong developer ecosystem, and unmatched AI performance

- Weaknesses: Dependence on third-party foundries (TSMC)

- Opportunities: Expansion in edge AI, autonomous vehicles, and AI-driven enterprise computing

- Threats: Growing competition from AMD, Intel, and custom AI chipmakers

Recent News and Updates

- March 2024: NVIDIA announced Blackwell architecture enabling trillion-parameter AI models

- July 2024: Partnered with AWS for new cloud-based AI supercomputing infrastructure

- January 2025: Released updated NVIDIA AI Enterprise platform for real-time generative model deployment

Intel Corporation

Corporate Information

- Headquarters: Santa Clara, California, United States

- Year Founded: 1968

- Ownership Type: Publicly Traded (NASDAQ: INTC)

History and Background

Intel Corporation was founded in 1968 by Robert Noyce and Gordon Moore as a semiconductor company that pioneered the development of microprocessors. Over the decades, Intel has played a central role in shaping modern computing, from personal computers to cloud infrastructure.

In the AI processor market, Intel has redefined its technological roadmap to focus on AI-optimized CPUs, GPUs, and accelerators for data centers, edge devices, and autonomous systems. With its Intel Xeon Scalable Processors, Gaudi AI accelerators, and Core Ultra processors, Intel provides flexible computing platforms that enable AI inference, training, and integration into enterprise applications.

Key Milestones / Timeline

- 1968: Founded in Santa Clara, California

- 1971: Launched the first commercial microprocessor, Intel 4004

- 2017: Acquired Mobileye for AI-driven autonomous vehicle systems

- 2020: Introduced Intel Xeon Scalable processors with built-in AI acceleration (DL Boost)

- 2023: Released Gaudi2 AI accelerator for data center AI workloads

- 2024: Announced Gaudi3 platform and next-generation Xeon processors optimized for AI performance

Business Overview

Intel operates as a global semiconductor company specializing in computing and networking platforms. Within the AI processor market, Intel's portfolio spans from CPUs with AI acceleration to dedicated AI chips and GPUs. Its processors are used in data centers, industrial automation, healthcare AI, and generative AI applications, positioning Intel as a key enabler of enterprise and cloud AI deployment.

Business Segments / Divisions

- Client Computing Group

- Data Center and AI Group

- Network and Edge Group

- Mobileye (Autonomous Driving)

Geographic Presence

Intel has operations in over 60 countries with major manufacturing and R&D centers in the United States, Ireland, Israel, and Malaysia.

Key Offerings

- Intel Xeon Scalable Processors with AI acceleration

- Intel Gaudi2 and Gaudi3 AI accelerators for large model training

- Intel Core Ultra Processors for edge and client-side AI workloads

- OpenVINO toolkit for AI model optimization and deployment

Financial Overview

Intel reports annual revenues of approximately USD 54 billion, with its Data Center and AI Group contributing a growing share due to the rising demand for AI infrastructure.

Key Developments and Strategic Initiatives

- March 2023: Released Gaudi2 accelerator supporting large AI model training

- October 2023: Expanded Xeon portfolio with 5th Gen processors integrating AI inference acceleration

- April 2024: Announced Gaudi3 for advanced AI computing and improved power efficiency

- January 2025: Introduced AI-integrated Core Ultra processors for hybrid cloud and edge computing environments

Partnerships & Collaborations

- Partnerships with AWS, Google Cloud, and Hugging Face for AI model optimization

- Collaborations with enterprises deploying Intel Xeon for AI-driven data center workloads

- Alliances with academic institutions for AI architecture research and chip design innovation

Product Launches / Innovations

- Intel Gaudi3 AI Accelerator (2024)

- 5th Gen Xeon Scalable Processor with AI Boost (2024)

- Intel Core Ultra Processor with integrated NPU for AI on-device computing (2025)

Technological Capabilities / R&D Focus

- Core technologies: CPU and AI accelerator architecture, deep learning optimization, chiplet integration, and process design

- Research Infrastructure: Intel Labs and R&D facilities in the U.S., Israel, and Ireland

- Innovation focus: Scalable AI acceleration, hybrid compute design, and energy-efficient model training

Competitive Positioning

- Strengths: Broad semiconductor expertise, diverse AI computing portfolio, and in-house manufacturing capacity

- Differentiators: Integration of AI acceleration directly into CPU and data center infrastructure

SWOT Analysis

- Strengths: Established data center presence, vertical integration, and global manufacturing footprint

- Weaknesses: Slower time-to-market compared to GPU-based competitors

- Opportunities: Expansion into AI inference at the edge and hybrid cloud computing

- Threats: Strong competition from NVIDIA, AMD, and ARM-based AI chip vendors

Recent News and Updates

- April 2024: Intel unveiled Gaudi3 AI accelerator for large-scale model training

- September 2024: Announced collaboration with Google Cloud to optimize LLM workloads on Xeon processors

- January 2025: Launched Core Ultra processors integrating NPUs for AI-driven client computing

Other Players in the Market

- Advanced Micro Devices, Inc. (AMD): AMD offers a broad portfolio of AI processors and accelerators, including the Instinct MI300X and MI300A for high-performance computing and generative AI workloads, as well as Ryzen AI 300 and Ryzen 8000 Series Processors with integrated NPUs for edge and consumer devices. The company's architecture emphasizes power efficiency, scalability, and AI-optimized compute performance across data center and client platforms.

- Qualcomm Technologies, Inc.: Qualcomm powers on-device AI and edge computing through its Snapdragon Platforms for mobile, PC, XR, and automotive applications. Key technologies include the Qualcomm Hexagon NPU, Adreno GPU, and Kryo/Oryon CPU, enabling real-time AI processing and energy-efficient performance for connected devices.

- Google LLC (Alphabet Inc.): Google designs Tensor Processing Units (TPUs) for data center-scale AI model training and inference and Google Tensor chips for mobile devices. Its custom silicon is optimized for deep learning, language models, and generative AI, providing vertical integration across cloud and consumer AI ecosystems.

- Apple Inc.: Apple integrates AI and machine learning acceleration into its proprietary Apple Silicon (M-series, A-series) chips, featuring Neural Engines that enhance on-device AI tasks such as image processing, speech recognition, and predictive modeling. Apple's focus on edge AI and privacy-preserving intelligence supports seamless AI experiences across its hardware ecosystem.

- Samsung Electronics Co., Ltd.: Samsung develops Exynos processors and AI accelerators with integrated Neural Processing Units (NPUs) for smartphones, IoT, and edge computing. The company's semiconductor division also manufactures AI chips for third parties, combining memory technology and AI compute leadership for hybrid architectures.

- Huawei Technologies Co., Ltd.: Huawei produces Ascend AI processors for data centers and Kirin AI chipsets for mobile devices through its HiSilicon division. Its MindSpore AI framework integrates with Ascend hardware to deliver end-to-end AI infrastructure, positioning Huawei as a leader in AI-enabled cloud and edge ecosystems.

- IBM Corporation: IBM focuses on AI hardware innovation through its AIU (Artificial Intelligence Unit) and IBM Telum processors, designed for enterprise-grade AI inference and analytics. Its hardware and hybrid cloud solutions power Watson AI and quantum-class computing, emphasizing efficiency and reliability in business AI workloads.

- MediaTek Inc.: MediaTek integrates AI processing units (APUs) within its Dimensity chipset series for smartphones, IoT devices, and automotive systems. Its NeuroPilot AI technology enables on-device intelligence, camera enhancement, and contextual processing for consumer-grade AI experiences.

- Amazon Web Services (AWS): AWS develops Inferentia and Trainium processors to power AI training and inference within its cloud infrastructure. These chips are purpose-built for large-scale model deployment, offering cost-efficient and high-performance compute for generative AI and deep learning workloads.

- Tesla, Inc.: Tesla's Dojo processor is designed for AI training in autonomous driving systems, enabling high-throughput computation for vision and neural network training. The company's in-house AI chip development supports vertical integration of hardware and software for real-time vehicle intelligence.

- Broadcom Inc.: Broadcom designs custom AI accelerators and ASICs used in data centers, networking, and hyperscale computing. Its solutions optimize low-latency data movement, inference acceleration, and connectivity, serving top-tier AI infrastructure providers.

- Graphcore Ltd.: Graphcore develops Intelligence Processing Units (IPUs) purpose-built for parallel AI computation and deep learning. Its IPU systems deliver high-speed performance for training and inference, with an emphasis on data flow architecture that accelerates next-generation AI workloads.

- Cerebras Systems: Cerebras designs the Wafer-Scale Engine (WSE), the largest single AI processor in the world, purpose-built for deep learning and large language model training. Its integrated system architecture provides unprecedented compute density and bandwidth, reducing training time for massive AI models.

Recent Developments

- In October 2025, Intel announced plans to launch a new AI chip to rival Nvidia and AMD. The company highlighted an architecture focused on real-time AI processing efficiency and on broad enterprise and consumer applications. (Source: https://timesofindia.indiatimes.com)

- In June 2025, Samsung launched the Galaxy Book4 Edge AI PC in India, featuring Qualcomm's Snapdragon X processor and Microsoft Copilot. The device redefines AI-powered productivity with intelligent task management and energy-efficient performance. (Source: https://news.samsung.com)

- In September 2025, SiPearl introduced the Athena1 processor, its first high-performance European microchip. Designed for supercomputing and AI workloads, it aims to strengthen Europe's technological independence and energy-efficient computing capabilities. (Source: https://www.hpcwire.com)

- In July 2025, Ambient Scientific launched an AI-native processor for edge devices. The chip delivers high-speed, low-power AI inference capabilities, enabling real-time processing for smart IoT, industrial automation, and mobile applications. (Source: https://www.bisinfotech.com)

Exclusive Insights

From an analyst's perspective, the AI processor market is set for substantial growth, particularly as organizations accelerate digital transformation and adopt edge intelligence technologies. The increasing adoption of AI workloads in data centers, autonomous and robotic systems, and consumer electronics is driving demand for high-performance, energy-efficient processors.

However, significant challenges remain, including rising costs for chip fabrication, an ongoing shortage of chips in some categories, and a reliance on advanced foundries and manufacturing processes that are demanding and complex.

Moreover, there is competitive pressure from custom chip manufacturers, who are developing optimized architectures for specialized and emerging AI workloads. Regardless, opportunities remain in neuromorphic computing and software, general AI, on-device AI processing, and quantum-capable chips. Building and maintaining collaborations and partnerships across the ecosystem will be critical to driving innovation and sustaining long-term viability within the AI processor landscape.

AI Processor Market Segments Covered in the Report

By Processor Type

- GPU (Graphics Processing Unit)

- CPU (Central Processing Unit)

- FPGA (Field Programmable Gate Array)

- ASIC (Application Specific Integrated Circuit)

- NPU (Neural Processing Unit)

- TPU (Tensor Processing Unit)

By Deployment Mode

- Cloud/Data Center

- Edge/On-Device

By Application

- Consumer Electronics

- Automotive

- Healthcare

- Industrial Automation

- Aerospace & Defense

- Retail & Robotics

By End-User Industry

- IT & Telecom

- Automotive

- Consumer Electronics

- Healthcare

- BFSI

- Industrial

By Region

- North America

- Europe

- Asia-Pacific

- Latin America

- Middle East & Africa

